publications

Publications by category, in reverse chronological order. Generated by jekyll-scholar.

2025

- MILo

MILo: Mesh-In-the-Loop Gaussian Splatting for Detailed and Efficient Surface Reconstruction. Antoine Guédon, Diego Gomez, Nissim Maruani, Bingchen Gong, George Drettakis, and Maks Ovsjanikov. ACM Transactions on Graphics, 2025.

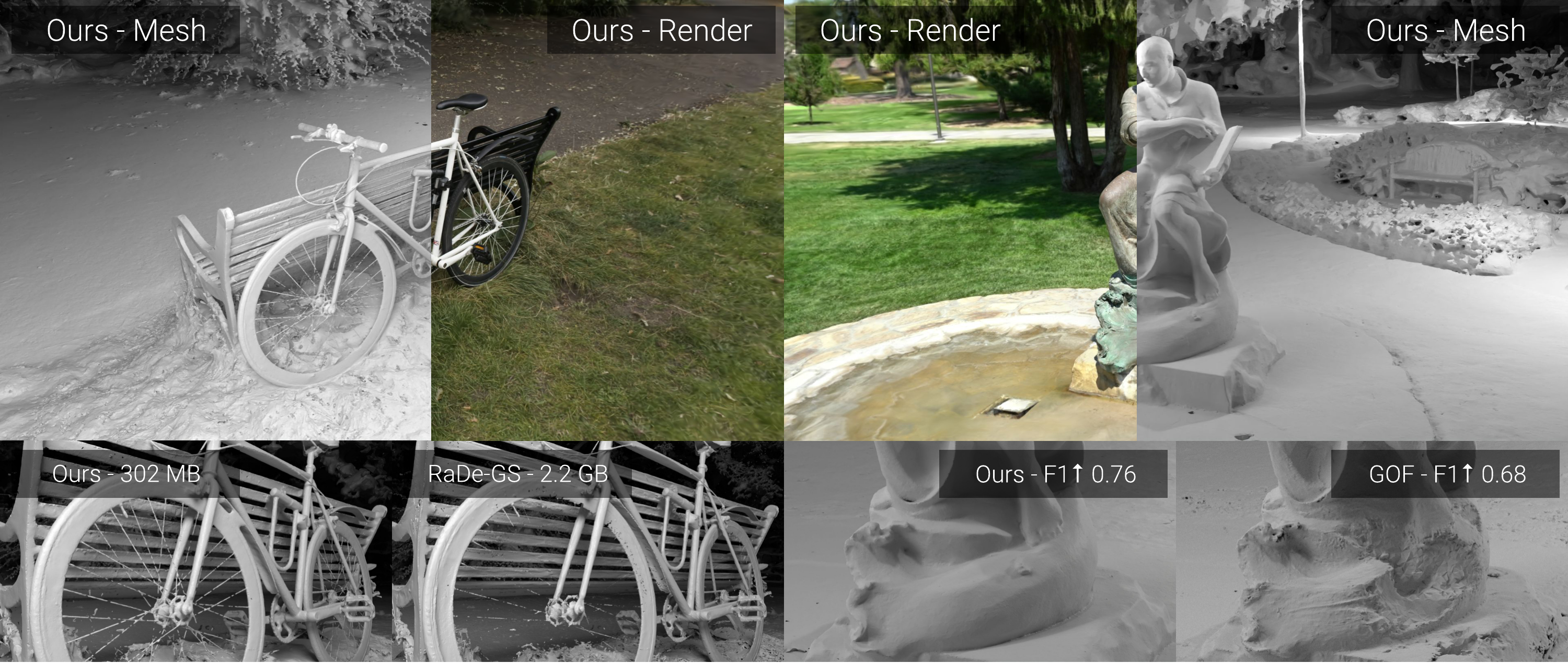

Our method introduces a novel differentiable mesh extraction framework that operates during the optimization of 3D Gaussian Splatting representations. At every training iteration, we differentiably extract a mesh, including both vertex locations and connectivity, only from Gaussian parameters. This enables gradient flow from the mesh to Gaussians, allowing us to promote bidirectional consistency between volumetric (Gaussians) and surface (extracted mesh) representations. This approach guides Gaussians toward configurations better suited for surface reconstruction, resulting in higher quality meshes with significantly fewer vertices. Our framework can be plugged into any Gaussian splatting representation, increasing performance while generating an order of magnitude fewer mesh vertices. MILo makes the reconstructions more practical for downstream applications like physics simulations and animation.

@article{guedon2025milo, author = {Gu{\'e}don, Antoine and Gomez, Diego and Maruani, Nissim and Gong, Bingchen and Drettakis, George and Ovsjanikov, Maks}, title = {MILo: Mesh-In-the-Loop Gaussian Splatting for Detailed and Efficient Surface Reconstruction}, journal = {ACM Transactions on Graphics}, year = {2025}, }

- ZeroKey

ZeroKey: Point-Level Reasoning and Zero-Shot 3D Keypoint Detection from Large Language Models. Bingchen Gong, Diego Gomez, Abdullah Hamdi, Abdelrahman Eldesokey, Ahmed Abdelreheem, Peter Wonka, and Maks Ovsjanikov. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Oct 2025.

We propose a novel zero-shot approach for keypoint detection on 3D shapes. Point-level reasoning on visual data is challenging as it requires precise localization capability, posing problems even for powerful models like DINO or CLIP. Traditional methods for 3D keypoint detection rely heavily on annotated 3D datasets and extensive supervised training, limiting their scalability and applicability to new categories or domains. In contrast, our method utilizes the rich knowledge embedded within Multi-Modal Large Language Models (MLLMs). Specifically, we demonstrate, for the first time, that pixel-level annotations used to train recent MLLMs can be exploited for both extracting and naming salient keypoints on 3D models without any ground truth labels or supervision. Experimental evaluations demonstrate that our approach achieves competitive performance on standard benchmarks compared to supervised methods, despite not requiring any 3D keypoint annotations during training. Our results highlight the potential of integrating language models for localized 3D shape understanding. This work opens new avenues for cross-modal learning and underscores the effectiveness of MLLMs in contributing to 3D computer vision challenges.

@inproceedings{Gong_2025_ICCV, author = {Gong, Bingchen and Gomez, Diego and Hamdi, Abdullah and Eldesokey, Abdelrahman and Abdelreheem, Ahmed and Wonka, Peter and Ovsjanikov, Maks}, title = {ZeroKey: Point-Level Reasoning and Zero-Shot 3D Keypoint Detection from Large Language Models}, booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)}, month = oct, year = {2025}, pages = {22089-22099}, }

- FourieRF

FourieRF: Few-Shot NeRFs via Progressive Fourier Frequency Control. Diego Gomez, Bingchen Gong, and Maks Ovsjanikov. In 2025 International Conference on 3D Vision (3DV), Mar 2025.

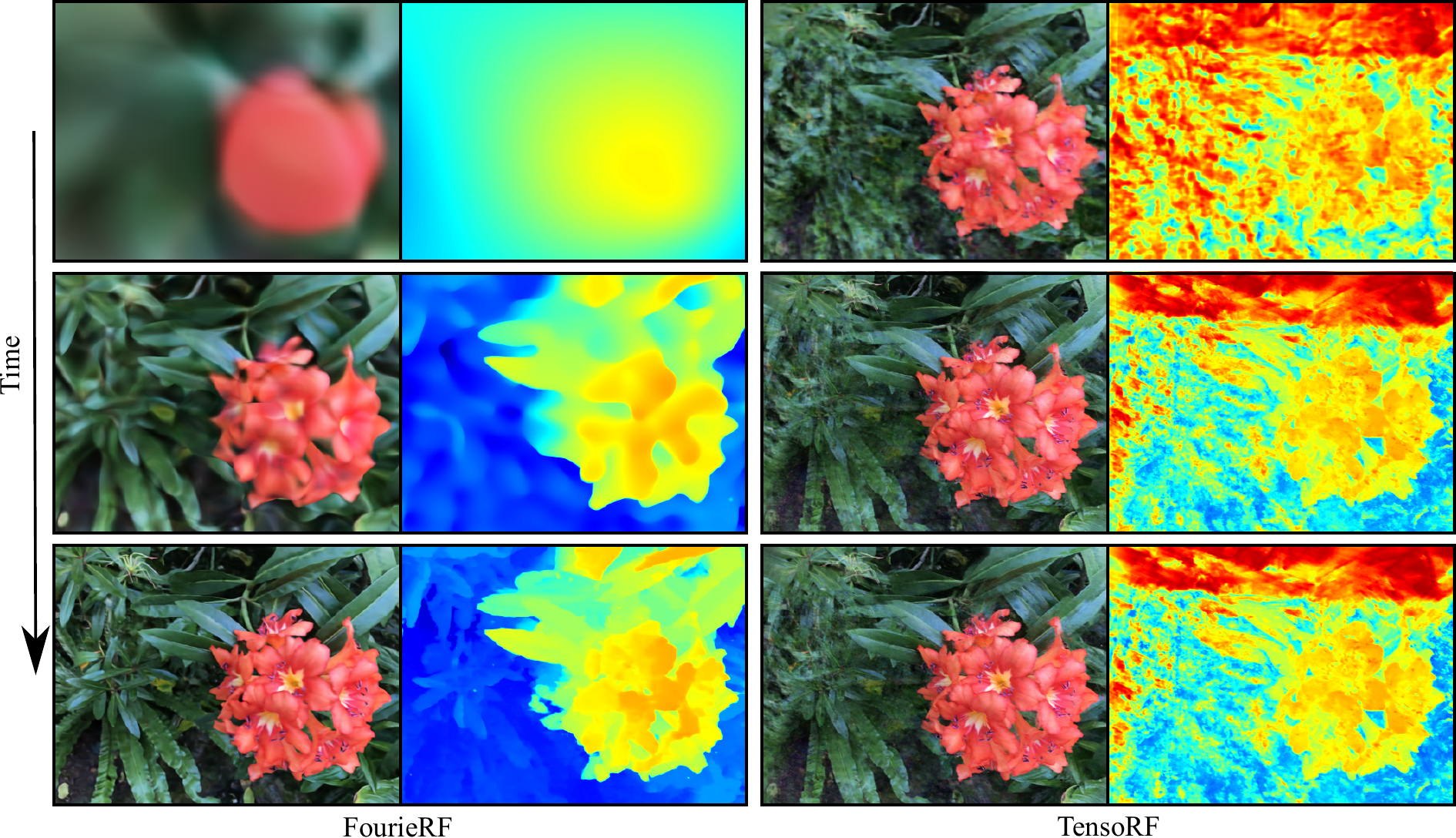

We present a novel approach for few-shot NeRF estimation, aimed at avoiding local artifacts and capable of efficiently reconstructing real scenes. In contrast to previous methods that rely on pre-trained modules or various data-driven priors that only work well in specific scenarios, our method is fully generic and is based on controlling the frequency of the learned signal in the Fourier domain. We observe that in NeRF learning methods, high-frequency artifacts often show up early in the optimization process, and the network struggles to correct them due to the lack of dense supervision in few-shot cases. To counter this, we introduce an explicit curriculum training procedure, which progressively adds higher frequencies throughout optimization, thus favoring global, low-frequency signals initially, and only adding details later. We represent the radiance fields using a grid-based model and introduce an efficient approach to control the frequency band of the learned signal in the Fourier domain. Therefore, our method achieves faster reconstruction and better rendering quality than purely MLP-based methods. We show that our approach is general and is capable of producing high-quality results on real scenes, at a fraction of the cost of competing methods. Our method opens the door to efficient and accurate scene acquisition in the few-shot NeRF setting.

@inproceedings{11125673, author = {Gomez, Diego and Gong, Bingchen and Ovsjanikov, Maks}, booktitle = {2025 International Conference on 3D Vision (3DV)}, title = {FourieRF: Few-Shot NeRFs via Progressive Fourier Frequency Control}, year = {2025}, volume = {}, number = {}, pages = {607-615}, keywords = {Training;Learning systems;Three-dimensional displays;Costs;Frequency-domain analysis;Estimation;Neural radiance field;Rendering (computer graphics);Frequency control;Optimization}, doi = {10.1109/3DV66043.2025.00061}, issn = {2475-7888}, month = mar, }

- GS-Stitching

Towards Realistic Example-based Modeling via 3D Gaussian Stitching. Xinyu Gao, Ziyi Yang, Bingchen Gong, Xiaoguang Han, Sipeng Yang, and Xiaogang Jin. In 2025 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2025.

Using parts of existing models to rebuild new models, commonly termed example-based modeling, is a classical methodology in the realm of computer graphics. Previous works mostly focus on shape composition, making them very hard to use for realistic composition of 3D objects captured from real-world scenes. This leads to combining multiple NeRFs into a single 3D scene to achieve seamless appearance blending. However, the current SeamlessNeRF method struggles to achieve interactive editing and harmonious stitching for real-world scenes due to its gradient-based strategy and grid-based representation. To this end, we present an example-based modeling method that combines multiple Gaussian fields in a point-based representation using sample-guided synthesis. Specifically, for composition, we create a GUI to segment and transform multiple fields in real time, easily obtaining a semantically meaningful composition of models represented by 3D Gaussian Splatting (3DGS). For texture blending, due to the discrete and irregular nature of 3DGS, straightforwardly applying gradient propagation as SeamlessNeRF does is not supported. Thus, a novel sampling-based cloning method is proposed to harmonize the blending while preserving the original rich texture and content. Our workflow consists of three steps: 1) real-time segmentation and transformation of 3DGS using a well-tailored GUI, 2) KNN analysis to identify boundary points in the intersecting area between the source and target models, and 3) two-phase optimization of the target model using sampling-based cloning and gradient constraints. Extensive experimental results validate that our approach significantly outperforms previous works in realistic synthesis, demonstrating its practicality.

@inproceedings{11093710, author = {Gao, Xinyu and Yang, Ziyi and Gong, Bingchen and Han, Xiaoguang and Yang, Sipeng and Jin, Xiaogang}, booktitle = {2025 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, title = {Towards Realistic Example-based Modeling via 3D Gaussian Stitching}, year = {2025}, volume = {}, number = {}, pages = {26597-26607}, keywords = {Solid modeling, Analytical models, Three-dimensional displays, Shape, Computational modeling, Cloning, Transforms, Real-time systems, Optimization, Graphical user interfaces, gaussian splatting, composition, example-based modeling}, doi = {10.1109/CVPR52734.2025.02477}, issn = {2575-7075}, month = jun, url = {https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=11093710&isnumber=11091608} }

2024

- Deform3DGS

Deform3DGS: Flexible Deformation for Fast Surgical Scene Reconstruction with Gaussian Splatting. Shuojue Yang, Qian Li, Daiyun Shen, Bingchen Gong, Qi Dou, and Yueming Jin. In Proceedings of Medical Image Computing and Computer Assisted Intervention – MICCAI 2024, Oct 2024.

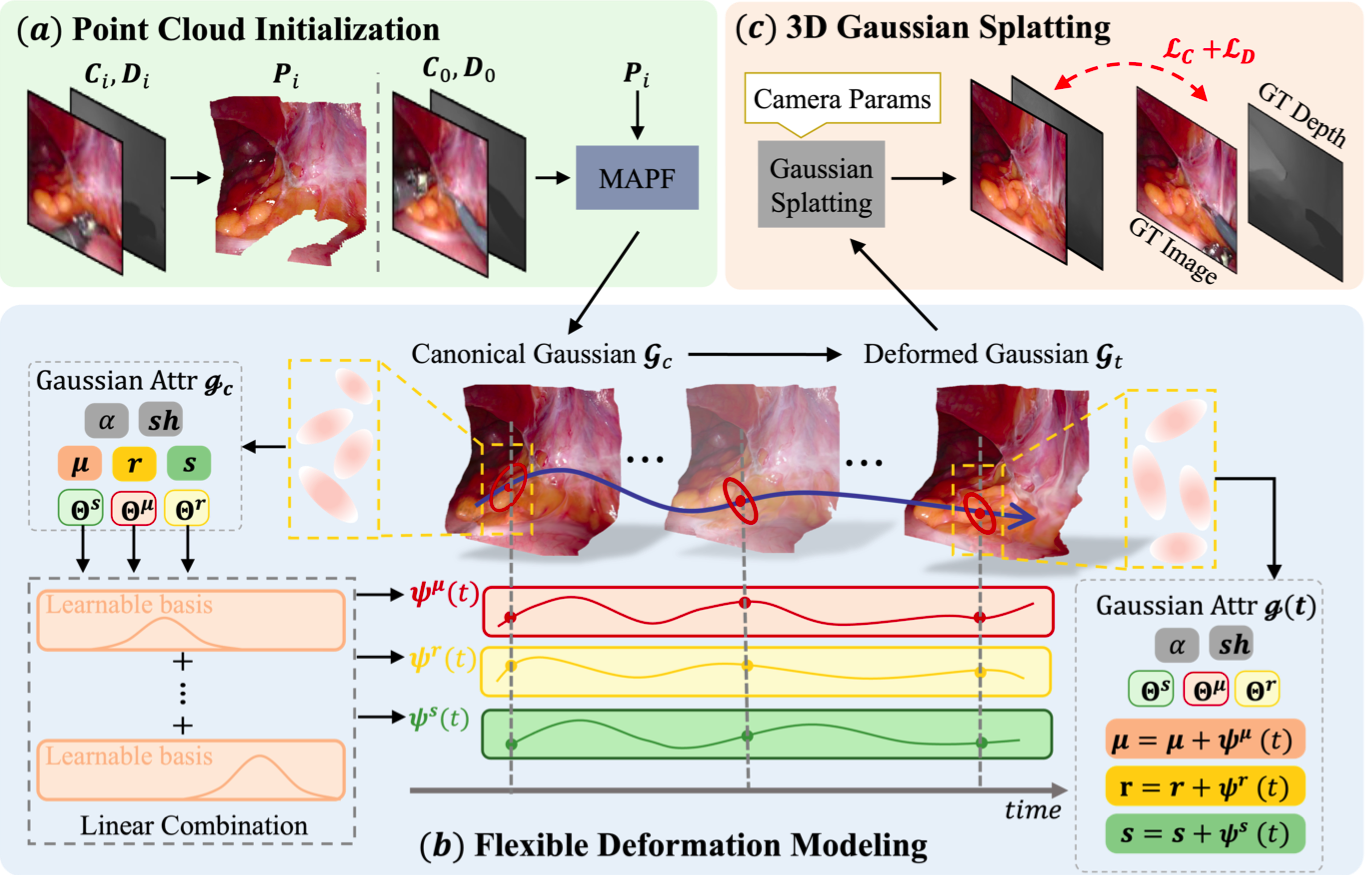

Tissue deformation poses a key challenge for accurate surgical scene reconstruction. Despite yielding high reconstruction quality, existing methods suffer from slow rendering speeds and long training times, limiting their intraoperative applicability. Motivated by recent progress in 3D Gaussian Splatting, an emerging technology in real-time 3D rendering, this work presents a novel fast reconstruction framework, termed Deform3DGS, for deformable tissues during endoscopic surgery. Specifically, we introduce 3D GS into surgical scenes by integrating a point cloud initialization to improve reconstruction. Furthermore, we propose a novel flexible deformation modeling scheme (FDM) to learn tissue deformation dynamics at the level of individual Gaussians. Our FDM can model the surface deformation with efficient representations, allowing for real-time rendering performance. More importantly, FDM significantly accelerates surgical scene reconstruction, demonstrating considerable clinical value, particularly in intraoperative settings where time efficiency is crucial. Experiments on DaVinci robotic surgery videos indicate the efficacy of our approach, showcasing superior reconstruction fidelity (PSNR: 37.90) and rendering speed (338.8 FPS) while substantially reducing training time to only 1 minute/scene.

@inproceedings{Yan_Deform3DGS_MICCAI2024, author = {Yang, Shuojue and Li, Qian and Shen, Daiyun and Gong, Bingchen and Dou, Qi and Jin, Yueming}, title = {Deform3DGS: Flexible Deformation for Fast Surgical Scene Reconstruction with Gaussian Splatting}, booktitle = {proceedings of Medical Image Computing and Computer Assisted Intervention -- MICCAI 2024}, year = {2024}, publisher = {Springer Nature Switzerland}, volume = {LNCS 15006}, month = oct, pages = {132--142}, }

- BilarfPro

Bilateral Guided Radiance Field Processing. Yuehao Wang, Chaoyi Wang, Bingchen Gong, and Tianfan Xue. ACM Trans. Graph., Jul 2024.

Neural Radiance Fields (NeRF) achieves unprecedented performance in novel view synthesis, utilizing multi-view consistency. When capturing multiple inputs, image signal processing (ISP) in modern cameras will independently enhance them, including exposure adjustment, color correction, local tone mapping, etc. While these processing steps greatly improve image quality, they often break the multi-view consistency assumption, leading to "floaters" in the reconstructed radiance fields. To address this concern without compromising visual aesthetics, we aim to first disentangle the enhancement by ISP at the NeRF training stage and re-apply user-desired enhancements to the reconstructed radiance fields at the finishing stage. Furthermore, to make the re-applied enhancements consistent between novel views, we need to perform image signal processing in 3D space (i.e. "3D ISP"). For this goal, we adopt the bilateral grid, a locally-affine model, as a generalized representation of ISP processing. Specifically, we optimize per-view 3D bilateral grids with radiance fields to approximate the effects of camera pipelines for each input view. To achieve user-adjustable 3D finishing, we propose to learn a low-rank 4D bilateral grid from a given single view edit, lifting photo enhancements to the whole 3D scene. We demonstrate our approach can boost the visual quality of novel view synthesis by effectively removing floaters and performing enhancements from user retouching.

@article{10.1145/3658148, author = {Wang, Yuehao and Wang, Chaoyi and Gong, Bingchen and Xue, Tianfan}, title = {Bilateral Guided Radiance Field Processing}, year = {2024}, issue_date = {July 2024}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {43}, number = {4}, issn = {0730-0301}, url = {https://doi.org/10.1145/3658148}, doi = {10.1145/3658148}, journal = {ACM Trans. Graph.}, month = jul, articleno = {148}, numpages = {13}, keywords = {neural radiance fields, neural rendering, bilateral grid, 3D editing}, }

- EndoNeRF-Sim

Efficient EndoNeRF reconstruction and its application for data-driven surgical simulation. Yuehao Wang, Bingchen Gong, Yonghao Long, Siu Hin Fan, and Qi Dou. International Journal of Computer Assisted Radiology and Surgery, May 2024.

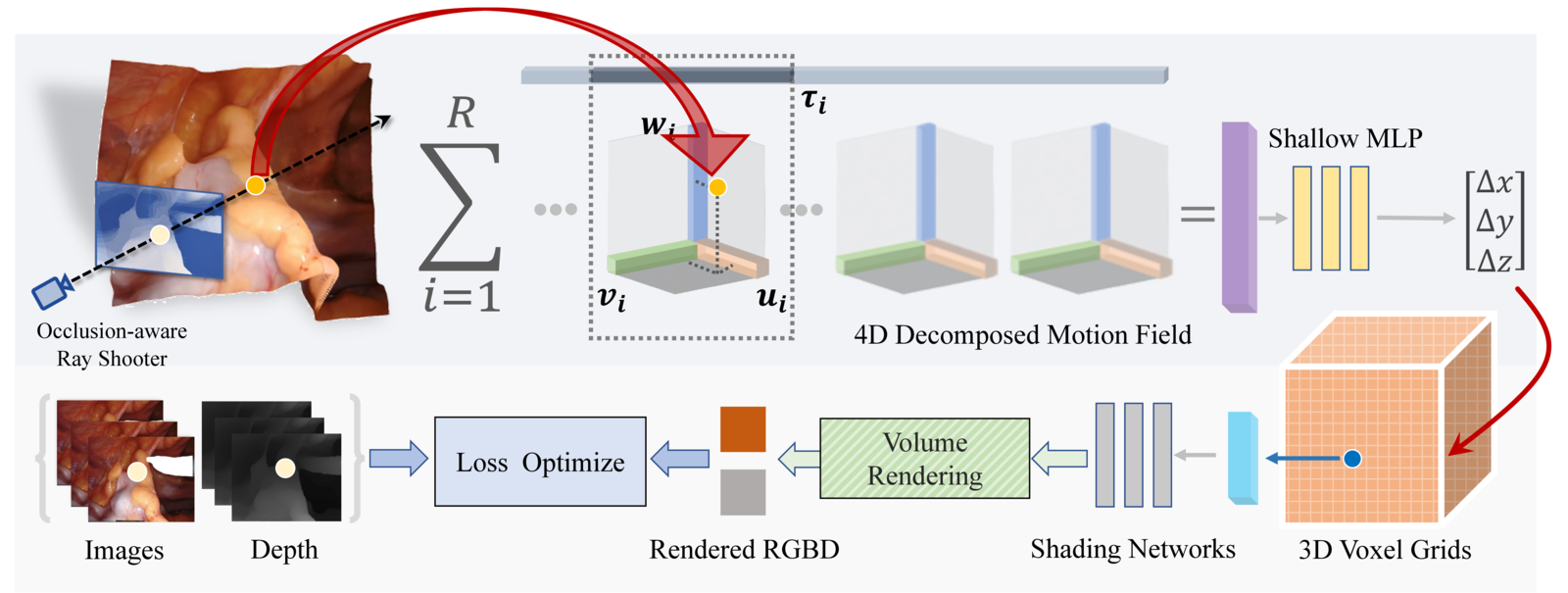

The healthcare industry has a growing need for realistic modeling and efficient simulation of surgical scenes. With effective models of deformable surgical scenes, clinicians are able to conduct surgical planning and surgery training on scenarios close to real-world cases. However, a significant challenge in achieving such a goal is the scarcity of high-quality soft tissue models with accurate shapes and textures. To address this gap, we present a data-driven framework that leverages emerging neural radiance field technology to enable high-quality surgical reconstruction and explore its application for surgical simulations.

@article{Wang2024_EndoNeRF, author = {Wang, Yuehao and Gong, Bingchen and Long, Yonghao and Fan, Siu Hin and Dou, Qi}, title = {Efficient EndoNeRF reconstruction and its application for data-driven surgical simulation}, journal = {International Journal of Computer Assisted Radiology and Surgery}, year = {2024}, month = may, volume = {19}, number = {5}, pages = {821--829}, issn = {1861-6429}, doi = {10.1007/s11548-024-03114-1}, url = {https://doi.org/10.1007/s11548-024-03114-1}, }

2023

- RecolorNeRF

RecolorNeRF: Layer Decomposed Radiance Fields for Efficient Color Editing of 3D Scenes. Bingchen Gong, Yuehao Wang, Xiaoguang Han, and Qi Dou. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa ON, Canada, May 2023.

Radiance fields have gradually become a main representation of media. Although their appearance editing has been studied, how to achieve view-consistent recoloring in an efficient manner is still underexplored. We present RecolorNeRF, a novel user-friendly color editing approach for neural radiance fields. Our key idea is to decompose the scene into a set of pure-colored layers, forming a palette. By this means, color manipulation can be conducted by altering the color components of the palette directly. To support efficient palette-based editing, the color of each layer needs to be as representative as possible. In the end, the problem is formulated as an optimization problem, where the layers and their blending weights are jointly optimized with the NeRF itself. Extensive experiments show that our jointly-optimized layer decomposition can be used against multiple backbones and produce photo-realistic recolored novel-view renderings. We demonstrate that RecolorNeRF outperforms baseline methods both quantitatively and qualitatively for color editing even in complex real-world scenes.

@inproceedings{10.1145/3581783.3611957, author = {Gong, Bingchen and Wang, Yuehao and Han, Xiaoguang and Dou, Qi}, title = {RecolorNeRF: Layer Decomposed Radiance Fields for Efficient Color Editing of 3D Scenes}, year = {2023}, isbn = {9798400701085}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3581783.3611957}, doi = {10.1145/3581783.3611957}, booktitle = {Proceedings of the 31st ACM International Conference on Multimedia}, pages = {8004–8015}, numpages = {12}, keywords = {recoloring, neural rendering, radiance fields, palettes, visual editing}, location = {Ottawa ON, Canada}, series = {MM '23}, }

- SeamlessNeRF

SeamlessNeRF: Stitching Part NeRFs with Gradient Propagation. Bingchen Gong, Yuehao Wang, Xiaoguang Han, and Qi Dou. In SIGGRAPH Asia 2023 Conference Papers, Sydney, NSW, Australia, May 2023.

Neural Radiance Fields (NeRFs) have emerged as promising digital mediums of 3D objects and scenes, sparking a surge in research to extend the editing capabilities in this domain. The task of seamless editing and merging of multiple NeRFs, resembling the "Poisson blending" in 2D image editing, remains a critical operation that is under-explored by existing work. To fill this gap, we propose SeamlessNeRF, a novel approach for seamless appearance blending of multiple NeRFs. Specifically, we aim to optimize the appearance of a target radiance field in order to harmonize its merge with a source field. We propose a well-tailored optimization procedure for blending, which is constrained by 1) pinning the radiance color in the intersecting boundary area between the source and target fields and 2) maintaining the original gradient of the target. Extensive experiments validate that our approach can effectively propagate the source appearance from the boundary area to the entire target field through the gradients. To the best of our knowledge, SeamlessNeRF is the first work that introduces gradient-guided appearance editing to radiance fields, offering solutions for seamless stitching of 3D objects represented in NeRFs.

@inproceedings{10.1145/3610548.3618238, author = {Gong, Bingchen and Wang, Yuehao and Han, Xiaoguang and Dou, Qi}, title = {SeamlessNeRF: Stitching Part NeRFs with Gradient Propagation}, year = {2023}, isbn = {9798400703157}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3610548.3618238}, doi = {10.1145/3610548.3618238}, booktitle = {SIGGRAPH Asia 2023 Conference Papers}, articleno = {33}, numpages = {10}, keywords = {seamless, gradient propagation, neural radiance fields, 3D editing, composition}, location = {Sydney, NSW, Australia}, series = {SA '23}, }

2022

- Meta-Fusion

Structure-Aware Meta-Fusion for Image Super-Resolution. Haoyu Ma, Bingchen Gong, and Yizhou Yu. ACM Trans. Multimedia Comput. Commun. Appl., Feb 2022.

There are two main categories of image super-resolution algorithms: distortion oriented and perception oriented. Recent evidence shows that reconstruction accuracy and perceptual quality are typically in disagreement with each other. In this article, we present a new image super-resolution framework that is capable of striking a balance between distortion and perception. The core of our framework is a deep fusion network capable of generating a final high-resolution image by fusing a pair of deterministic and stochastic images using spatially varying weights. To make a single fusion model produce images with varying degrees of stochasticity, we further incorporate meta-learning into our fusion network. Once equipped with the kernel produced by a kernel prediction module, our meta fusion network is able to produce final images at any desired level of stochasticity. Experimental results indicate that our meta fusion network outperforms existing state-of-the-art SISR algorithms on widely used datasets, including PIRM-val, DIV2K-val, Set5, Set14, Urban100, Manga109, and B100. In addition, it is capable of producing high-resolution images that achieve low distortion and high perceptual quality simultaneously.

@article{10.1145/3477553, author = {Ma, Haoyu and Gong, Bingchen and Yu, Yizhou}, title = {Structure-Aware Meta-Fusion for Image Super-Resolution}, year = {2022}, issue_date = {May 2022}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {18}, number = {2}, issn = {1551-6857}, url = {https://doi.org/10.1145/3477553}, doi = {10.1145/3477553}, journal = {ACM Trans. Multimedia Comput. Commun. Appl.}, month = feb, articleno = {60}, numpages = {25}, keywords = {image fusion, Super-resolution, meta-learning}, }

2021

- ME-PCN

ME-PCN: Point Completion Conditioned on Mask Emptiness. Bingchen Gong, Yinyu Nie, Yiqun Lin, Xiaoguang Han, and Yizhou Yu. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Oct 2021.

Point completion refers to completing the missing geometries of an object from incomplete observations. Mainstream methods predict the missing shapes by decoding a global feature learned from the input point cloud, which often leads to deficient results in preserving topology consistency and surface details. In this work, we present ME-PCN, a point completion network that leverages ‘emptiness’ in 3D shape space. Given a single depth scan, previous methods often encode the occupied partial shapes while ignoring the empty regions (e.g. holes) in depth maps. In contrast, we argue that these ‘emptiness’ clues indicate shape boundaries that can be used to improve topology representation and detail granularity on surfaces. Specifically, our ME-PCN encodes both the occupied point cloud and the neighboring ‘empty points’. It estimates coarse-grained but complete and reasonable surface points in the first stage, followed by a refinement stage to produce fine-grained surface details. Comprehensive experiments verify that our ME-PCN presents better qualitative and quantitative performance against the state-of-the-art. Besides, we further prove that our ‘emptiness’ design is lightweight and easy to embed in existing methods, which shows consistent effectiveness in improving the CD and EMD scores.

@inproceedings{Gong_2021_ICCV, author = {Gong, Bingchen and Nie, Yinyu and Lin, Yiqun and Han, Xiaoguang and Yu, Yizhou}, title = {ME-PCN: Point Completion Conditioned on Mask Emptiness}, booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)}, month = oct, year = {2021}, pages = {12488-12497}, doi = {10.1109/iccv48922.2021.01226}, url = {https://doi.org/10.1109%2Ficcv48922.2021.01226}, }

2018

- DTSN

Image Super-Resolution via Deterministic-Stochastic Synthesis and Local Statistical Rectification. Weifeng Ge, Bingchen Gong, and Yizhou Yu. ACM Trans. Graph., Dec 2018.

Single image superresolution has been a popular research topic in the last two decades and has recently received a new wave of interest due to deep neural networks. In this paper, we approach this problem from a different perspective. With respect to a downsampled low resolution image, we model a high resolution image as a combination of two components, a deterministic component and a stochastic component. The deterministic component can be recovered from the low-frequency signals in the downsampled image. The stochastic component, on the other hand, contains the signals that have little correlation with the low resolution image. We adopt two complementary methods for generating these two components. While generative adversarial networks are used for the stochastic component, deterministic component reconstruction is formulated as a regression problem solved using deep neural networks. Since the deterministic component exhibits clearer local orientations, we design novel loss functions tailored for such properties for training the deep regression network. These two methods are first applied to the entire input image to produce two distinct high-resolution images. Afterwards, these two images are fused together using another deep neural network that also performs local statistical rectification, which tries to make the local statistics of the fused image match the same local statistics of the groundtruth image. Quantitative results and a user study indicate that the proposed method outperforms existing state-of-the-art algorithms with a clear margin.

@article{10.1145/3272127.3275060, author = {Ge, Weifeng and Gong, Bingchen and Yu, Yizhou}, title = {Image Super-Resolution via Deterministic-Stochastic Synthesis and Local Statistical Rectification}, year = {2018}, issue_date = {December 2018}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {37}, number = {6}, issn = {0730-0301}, url = {https://doi.org/10.1145/3272127.3275060}, doi = {10.1145/3272127.3275060}, journal = {ACM Trans. Graph.}, month = dec, articleno = {260}, numpages = {14}, keywords = {local gram matrix, deep learning, image superresolution, deterministic component, stochastic component, local correlation matrix}, }

2016

- Tamp

Tamp: A Library for Compact Deep Neural Networks with Structured Matrices. Bingchen Gong, Brendan Jou, Felix Yu, and Shih-Fu Chang. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, Dec 2016.

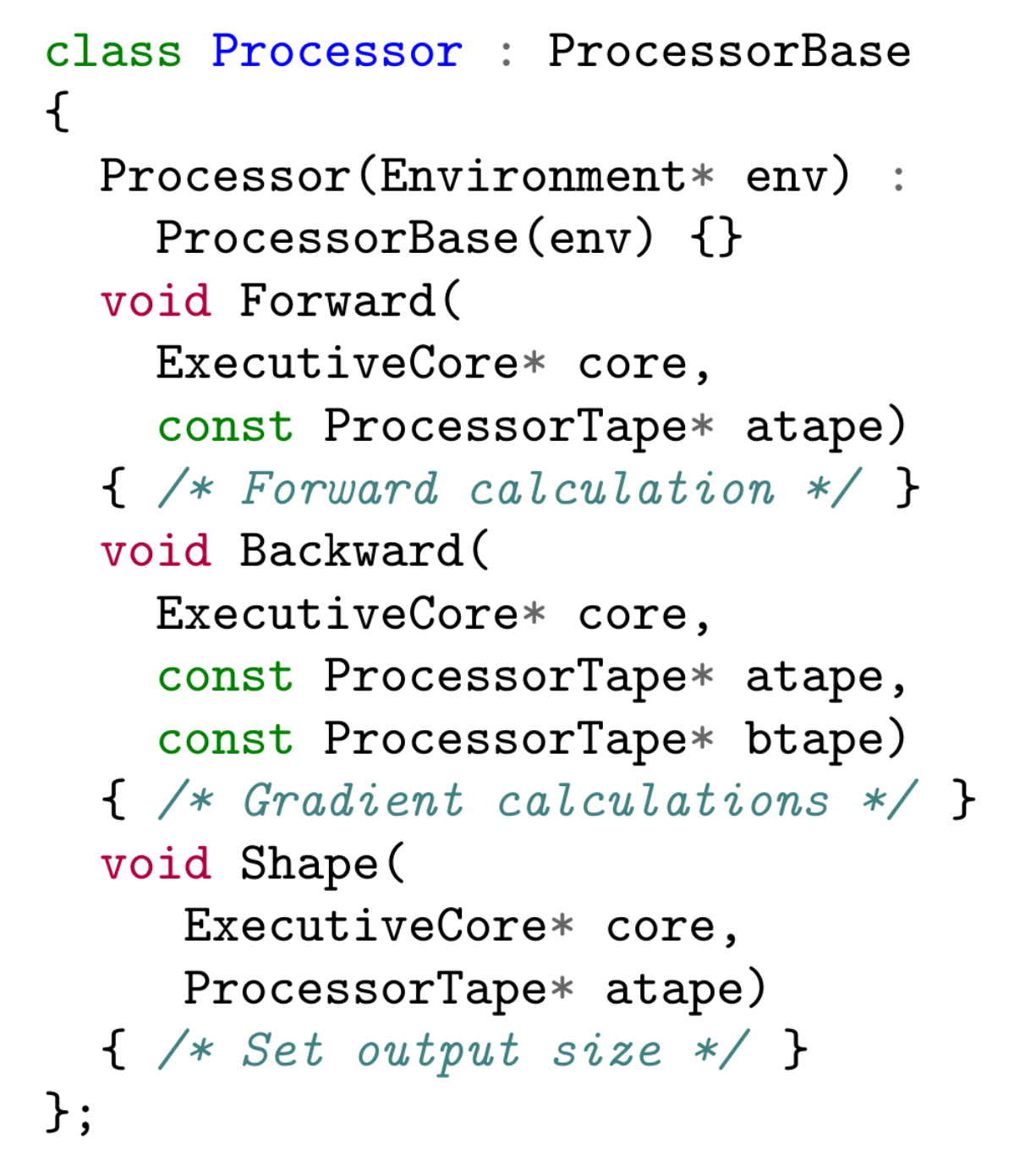

We introduce Tamp, an open source C++ library for reducing the space and time costs of deep neural network models. In particular, Tamp implements several recent works which use structured matrices to replace unstructured matrices which are often bottlenecks in neural networks. Tamp is also designed to serve as a unified development platform with several supported optimization back-ends and abstracted data types. This paper introduces the design and API and also demonstrates the effectiveness with experiments on public datasets.

@inproceedings{10.1145/2964284.2973802, author = {Gong, Bingchen and Jou, Brendan and Yu, Felix and Chang, Shih-Fu}, title = {Tamp: A Library for Compact Deep Neural Networks with Structured Matrices}, year = {2016}, isbn = {9781450336031}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2964284.2973802}, doi = {10.1145/2964284.2973802}, booktitle = {Proceedings of the 24th ACM International Conference on Multimedia}, pages = {1206–1209}, numpages = {4}, keywords = {open source, neural networks, structured matrices}, location = {Amsterdam, The Netherlands}, series = {MM '16}, }

2015

- Audeosynth

Audeosynth: Music-Driven Video Montage. Zicheng Liao, Yizhou Yu, Bingchen Gong, and Lechao Cheng. ACM Trans. Graph., Jul 2015.

We introduce music-driven video montage, a media format that offers a pleasant way to browse or summarize video clips collected from various occasions, including gatherings and adventures. In music-driven video montage, the music drives the composition of the video content. According to musical movement and beats, video clips are organized to form a montage that visually reflects the experiential properties of the music. Nonetheless, it takes enormous manual work and artistic expertise to create it. In this paper, we develop a framework for automatically generating music-driven video montages. The input is a set of video clips and a piece of background music. By analyzing the music and video content, our system extracts carefully designed temporal features from the input, and casts the synthesis problem as an optimization and solves the parameters through Markov Chain Monte Carlo sampling. The output is a video montage whose visual activities are cut and synchronized with the rhythm of the music, rendering a symphony of audio-visual resonance.

@article{10.1145/2766966, author = {Liao, Zicheng and Yu, Yizhou and Gong, Bingchen and Cheng, Lechao}, title = {Audeosynth: Music-Driven Video Montage}, year = {2015}, issue_date = {August 2015}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {34}, number = {4}, issn = {0730-0301}, url = {https://doi.org/10.1145/2766966}, doi = {10.1145/2766966}, journal = {ACM Trans. Graph.}, month = jul, articleno = {68}, numpages = {10}, keywords = {audio-visual synchresis}, }